Nvidia’s $1 billion investment in Nokia drew strong reactions across telecom and tech. The deal aims to speed up the use of AI in radio access networks, an approach often called AI‑RAN. It also ties Nvidia’s GPU platforms to Nokia’s portfolio as the industry inches toward 6G. The headline is bold; the real question is who gains most, and when.

Quick context

Nokia needed a clear win. It lost ground to Ericsson and Samsung in key operator accounts, then reoriented toward data centers after buying Infinera. Its new CEO has a strong background in compute and AI, which aligns with Nvidia’s pitch. The partnership signals that Nokia wants to lead in AI‑enabled networking rather than follow.

What is AI‑RAN, in plain terms

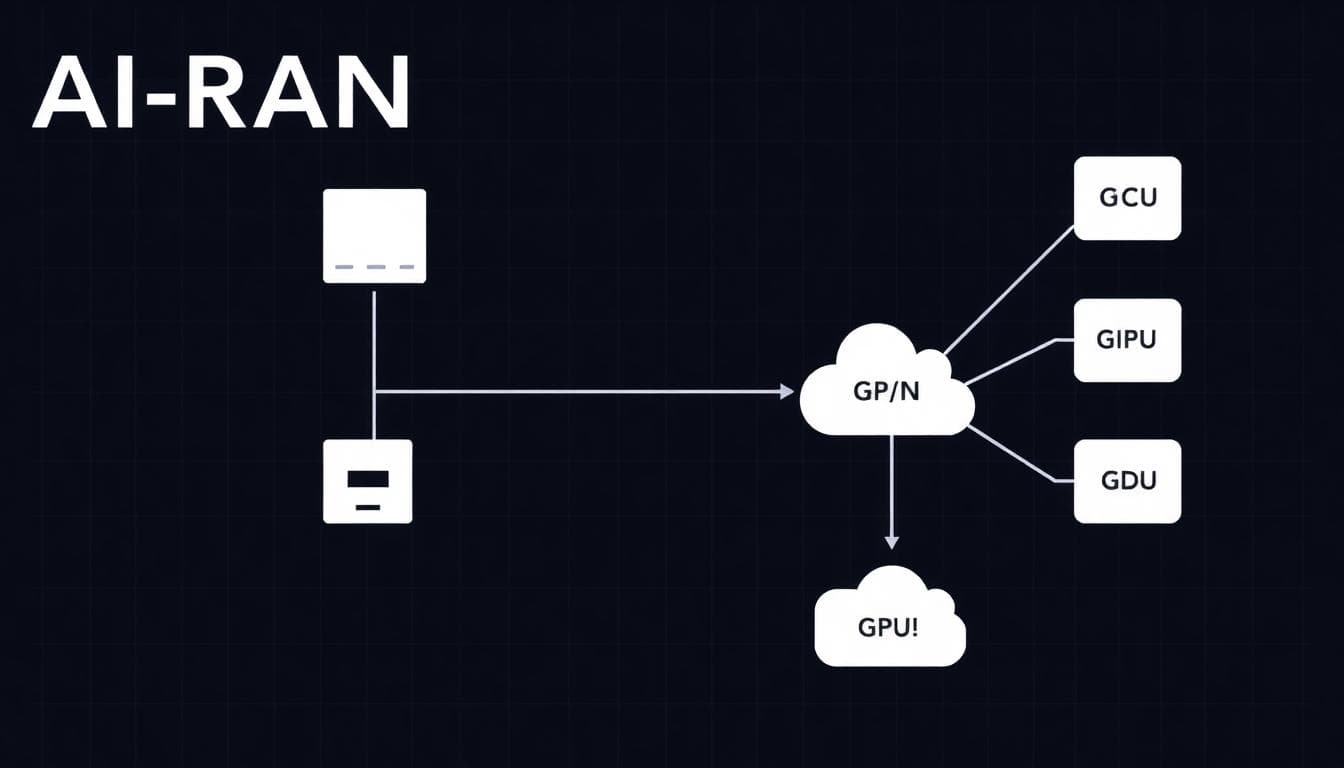

AI‑RAN brings GPU‑accelerated intelligence into the radio access network. Think of machine learning assisting traffic prediction, interference management, beamforming, and scheduling. The goal is better performance, higher spectral efficiency, and smarter energy use. GPUs sit in the distributed unit (DU) or centralized unit (CU) layer and run models that adapt to changing radio conditions in near real time.
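To make "traffic prediction" and "smarter energy use" concrete, here is a minimal Python sketch of the pattern: forecast next-interval load, then decide whether an extra capacity carrier can sleep. It uses simple exponential smoothing as a stand-in for a trained ML model. The function names, the smoothing factor, and the 0.3 sleep threshold are hypothetical values chosen for illustration, not anything Nokia or Nvidia has published.

```python
# Illustrative sketch: exponential smoothing stands in for a trained ML model;
# the function names, alpha, and sleep threshold are hypothetical.
from typing import Iterable


def smoothed_forecast(loads: Iterable[float], alpha: float = 0.5) -> float:
    """Exponentially smoothed estimate of next-interval cell load (0.0 to 1.0).

    alpha weights the newest sample; older samples decay geometrically.
    """
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    estimate = None
    for load in loads:
        estimate = load if estimate is None else alpha * load + (1 - alpha) * estimate
    if estimate is None:
        raise ValueError("need at least one load sample")
    return estimate


def keep_capacity_carrier_on(
    loads: Iterable[float], sleep_below: float = 0.3, alpha: float = 0.5
) -> bool:
    """Hypothetical policy: let the extra carrier sleep when forecast load is low."""
    return smoothed_forecast(loads, alpha) >= sleep_below


if __name__ == "__main__":
    evening = [0.8, 0.6, 0.2, 0.1]  # made-up load samples, falling off
    print(smoothed_forecast(evening))         # roughly 0.275
    print(keep_capacity_carrier_on(evening))  # False: the carrier can sleep
```

A production system would add hysteresis so the carrier does not flap on and off, and would replace the smoother with a model trained on the cell's own history; the forecast-then-act structure is the part this sketch is meant to show.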

Why this could be big for Nvidia

- Market expansion: Telecom becomes a new lane for GPUs beyond data centers.

- Platform pull: If Nokia bakes Nvidia’s platform into radios and RAN software, Nvidia gains a beachhead at scale.

- Recurring revenue: AI‑RAN workloads can grow with traffic and features, creating ongoing demand for accelerators and software.

- 6G timing: Large RAN investments tend to cluster around generational upgrades. If AI‑RAN proves itself, Nvidia can ride the 6G capex wave.

In short, the upside for Nvidia could be large if operators buy in. The investment looks modest compared to potential long‑term revenue from AI platforms deployed across thousands of sites.

Why it helps Nokia now

- Strategic narrative: A stronger AI story for customers and investors.

- Portfolios converge: Nokia’s data center push and optical assets pair nicely with AI‑accelerated networking.

- Differentiation: A route to stand out against Ericsson and Huawei, especially in open and cloud‑native RAN.

- Ecosystem gravity: Tighter ties with a top AI supplier can attract developers and partners.

For Nokia, this is both practical and symbolic. It bolsters product direction and signals momentum during a competitive period.

The operator perspective: benefits and sticking points

Operators want better spectral efficiency, lower churn, and leaner energy use. AI‑RAN promises gains on all three. But there are hurdles.

- Cost: GPUs are expensive. Deploying them across many sites adds up.

- Power: Energy is a major operating expense. Accelerators increase power draw.

- Integration: Blending AI models with live RAN control loops is hard and must be reliable.

- Pace: Telcos move carefully. They need proofs, standards, and vendor roadmaps they can trust.

Some major operators are not yet convinced that GPU‑centric designs beat CPU or ASIC paths for every use case. Expect trials in urban hotspots and centralized RAN sites first, where density and data improve the ROI.
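The cost and power hurdles, and the case for starting in dense sites, reduce to per-cell arithmetic. The sketch below amortizes accelerator capex and nets accelerator energy against whatever site energy the AI features save. Every number in it (prices, wattages, lifetimes, savings, cells served per accelerator) is a made-up placeholder, and the `Placement` type and `annual_cost_per_cell` function are hypothetical; operators would substitute values measured in their own pilots.

```python
# Illustrative TCO sketch: all names and numbers are hypothetical placeholders.
from dataclasses import dataclass

HOURS_PER_YEAR = 8760


@dataclass
class Placement:
    name: str
    accelerator_capex: float    # purchase cost per accelerator (any currency)
    lifetime_years: float       # straight-line amortization period
    accelerator_watts: float    # average power draw per accelerator
    cells_per_accelerator: int  # cells sharing one accelerator (density/utilization lever)


def annual_cost_per_cell(
    p: Placement, price_per_kwh: float, saved_kwh_per_cell: float
) -> float:
    """Yearly cost per cell: amortized capex plus net energy cost.

    A negative energy term means the AI features save more site energy
    than the accelerator consumes.
    """
    capex = p.accelerator_capex / p.lifetime_years / p.cells_per_accelerator
    accel_kwh = p.accelerator_watts * HOURS_PER_YEAR / 1000 / p.cells_per_accelerator
    net_energy_cost = (accel_kwh - saved_kwh_per_cell) * price_per_kwh
    return capex + net_energy_cost


if __name__ == "__main__":
    options = [
        Placement("sparse cell site", 10_000, 5, 300, 3),
        Placement("dense centralized RAN hub", 30_000, 5, 700, 60),
    ]
    for p in options:
        cost = annual_cost_per_cell(p, price_per_kwh=0.20, saved_kwh_per_cell=500)
        print(f"{p.name}: {cost:.2f} per cell per year")
```

In this toy model, spreading one accelerator across more cells is what makes the dense hub far cheaper per cell, which mirrors the logic of starting trials in hotspots and centralized sites. It ignores transport, cooling, software licensing, and revenue effects such as lower churn.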

Winners and when they win

Here is how the benefit curve could play out if AI‑RAN meets expectations:

- Nvidia: Early platform wins, developer mindshare, and longer‑term volume as AI features mature.

- Nokia: Near‑term momentum, differentiated offers, and improved pull with cloud‑minded customers.

- Operators: Gains appear only after careful pilots, and benefits depend on traffic mix, spectrum, and site power budgets.

- Partners: Cloud providers, integrators, and app vendors plug into the stack where it makes sense.

Key technical questions to watch

- Latency budget: Can GPU inference fit tight RAN control loops without degrading performance?

- Energy profile: Do AI features cut total site energy enough to offset accelerator draw?

- Model lifecycle: Who trains, verifies, and updates models across thousands of cells?

- Standards and openness: Will solutions interoperate across vendors in open RAN settings?

- Placement: Which sites need accelerators on‑prem versus in regional data centers?
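The latency-budget and placement questions interact: moving inference from the cell site to a regional data center adds fiber round-trip time. The sketch below checks which placements fit a given control-loop budget. It assumes roughly 5 µs of one-way propagation delay per km of fiber (light in glass covers about 200 km per millisecond); the inference times, overheads, distances, and budgets are illustrative placeholders, not standardized values, and the function names are hypothetical.

```python
# Illustrative latency check: budgets, timings, and names are hypothetical.
from typing import Dict, List, Tuple

FIBER_US_PER_KM = 5.0  # approx. one-way propagation delay in optical fiber


def loop_latency_ms(inference_ms: float, distance_km: float, overhead_ms: float) -> float:
    """Inference time + fiber round trip + fixed processing/queuing overhead."""
    round_trip_ms = 2 * distance_km * FIBER_US_PER_KM / 1000
    return inference_ms + round_trip_ms + overhead_ms


def placements_within_budget(
    placements: Dict[str, Tuple[float, float, float]], budget_ms: float
) -> List[str]:
    """Names of placements whose loop latency fits the budget.

    Each value is (inference_ms, distance_km, overhead_ms).
    """
    return [
        name
        for name, (inference_ms, distance_km, overhead_ms) in placements.items()
        if loop_latency_ms(inference_ms, distance_km, overhead_ms) <= budget_ms
    ]


if __name__ == "__main__":
    sites = {
        "cell site": (0.3, 0.0, 0.1),
        "regional data center (200 km)": (0.2, 200.0, 0.5),
    }
    print(placements_within_budget(sites, budget_ms=1.0))   # ['cell site']
    print(placements_within_budget(sites, budget_ms=10.0))  # both placements
```

Under these assumptions, a tight per-slot loop effectively forces on-site accelerators, while slower optimization loops have room to be pooled in regional sites.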

What this means for 6G

6G research emphasizes intelligent air interfaces, sensing, and tighter cloud integration. If AI‑RAN proves itself in 5G Advanced, it will influence 6G designs and spending. Nvidia wants its platform to be the default for that future. Nokia wants its RAN, transport, and data center assets positioned to capture spend when operators refresh networks later this decade.

For network planners: a simple action plan

- Start with targeted pilots in dense areas or centralized RAN hubs.

- Measure spectral efficiency, user throughput, and site energy before and after AI features.

- Model TCO under different accelerator placements and utilization levels.

- Demand vendor transparency on power, latency, and model governance.

- Build a fallback path; design AI features to degrade gracefully if accelerators are offline.
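Graceful degradation is a small but important pattern: every AI decision should have a conventional default that takes over when the model is offline, raises an error, returns something invalid, or misses its deadline. The sketch below shows that pattern for a hypothetical beam-selection step, using "pick the beam with the strongest measurement" as the rule-based fallback. The function name, the fallback rule, and the deadlines are assumptions for illustration.

```python
# Illustrative fallback pattern: names, fallback rule, and deadlines are hypothetical.
import time
from typing import Callable, Optional, Sequence, Tuple

BeamModel = Callable[[Sequence[float]], int]


def choose_beam(
    measurements: Sequence[float],
    model: Optional[BeamModel],
    deadline_s: float,
) -> Tuple[int, str]:
    """Return (beam index, decision source), falling back to a rule-based choice."""
    # Conventional default: the beam with the strongest measured signal.
    fallback = max(range(len(measurements)), key=lambda i: measurements[i])
    if model is None:  # accelerator or model service unavailable
        return fallback, "fallback:offline"
    start = time.perf_counter()
    try:
        beam = model(measurements)
    except Exception:  # any inference failure degrades to the default
        return fallback, "fallback:error"
    # This detects a late answer only after the call returns; a real system
    # would also need a hard timeout so a hung model cannot stall the loop.
    if time.perf_counter() - start > deadline_s:
        return fallback, "fallback:late"
    if not 0 <= beam < len(measurements):  # reject out-of-range outputs
        return fallback, "fallback:invalid"
    return beam, "model"


if __name__ == "__main__":
    beam_quality = [0.1, 0.9, 0.4]  # made-up normalized per-beam measurements
    print(choose_beam(beam_quality, None, deadline_s=0.001))       # (1, 'fallback:offline')
    print(choose_beam(beam_quality, lambda m: 2, deadline_s=1.0))  # (2, 'model')
```

Returning the decision source alongside the beam gives operators a cheap way to count how often the AI path is actually used, which feeds directly into the before-and-after measurements recommended above.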

Bottom line

Nvidia appears to have the largest upside if AI‑RAN scales, thanks to platform pull and long‑tail workloads. Nokia gains a timely strategic lift and a stronger AI narrative. Operators can benefit, but only where performance and energy savings beat the added cost and complexity. The pivot point will be real‑world trials over the next 12 to 24 months. If those show clear ROI, AI‑RAN will move from buzz to baseline in the run‑up to 6G.
